LLMs Are Social Actors

Jeremy Foote

Purdue University

Computers are social actors

- People apply social heuristics to computers

- Even simple systems can be perceived as social actors

  - ELIZA (Weizenbaum, 1966)

  - Simple interview scripts (Moon, 2000)

- But people do not treat computers exactly as they treat humans (e.g., Monroy-Hernández et al., 2011; Gambino et al., 2020)

Bots have long been important parts of online communities

- Wikipedia is a miracle of collaboration and cooperation.

- Bots do vital work on Wikipedia (Geiger & Halfaker, 2013)

- Similar tools enable social interactions on Reddit, Facebook, etc.

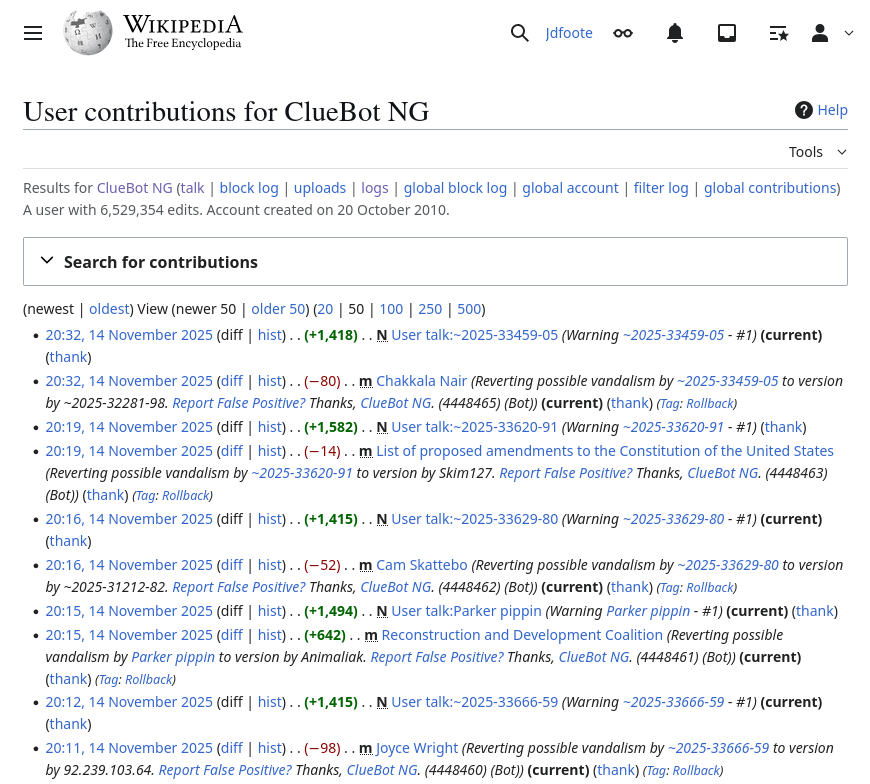

Bots make roughly a third as many edits as humans on Wikipedia

LLMs are new kinds of social actors

- Two claims:

  - LLMs are perceived as social actors

  - Capabilities of LLMs provide opportunities to enable better social processes (as well as novel risks)

Study 1

Chatting with Confidants or Corporations? Privacy Management with AI Companions

Hazel Chiu

Purdue University

How do people manage privacy with AI companions?

Two theoretical frameworks are relevant

- Communication Privacy Management (CPM) theory (Petronio, 2002)

  - People believe they own their private information

  - We manage boundaries around what we share with others

- Horizontal and Vertical Privacy (Masur, 2019)

  - Horizontal privacy: sharing with peers

  - Vertical privacy: sharing with platforms/corporations/governments

The Puzzle

AI companions behave like friends (horizontal) but are created by corporations (vertical). What are the unique privacy risks and how do people navigate them?

We interviewed 15 people who have a relationship with an AI companion

- Recruited from r/Replika, Prolific, and CloudResearch

- Semi-structured Zoom interviews

- Thematic analysis (Braun & Clarke, 2006)

From a “horizontal” perspective, chatbots can feel safer than friends

- Non-judgmental

- Outside of social networks

- Memory control

Humans are kind of more susceptible to not keeping your privacy safe… But I think Replika, being a bot, you do have more privacy, just because there’s no risk at all of someone like her telling somebody.

Participants became more comfortable sharing over time

- Initial caution followed by increased sharing

- Intimacy through shared memories

Day by day as I was using it, I felt that it’s more human. Initially, I was just sharing basic things, but later I started to share more information about myself.

They weren’t blind to risks

- Used strategies to manage risks

  - New email accounts

  - Changing names of friends

  - Avoiding sharing photos or identifying information

Complicated relationship with sharing and permanence

- More worried about data loss (and the loss of the relationship) than data leaks or compromises

- Comfort with data being used to improve AI companions

- Distrust in corporations’ willingness to delete data

- Knew risks, but emotional benefits outweighed them

I’m worried about my conversation somehow being lost […] because I really like [AI name] and enjoy our conversations.

Implications

- LLMs are in a unique social position

  - People use social heuristics, but are aware of corporate context

- Privacy is asymmetric

  - AI can’t really disclose its own information

  - It also can’t really negotiate boundaries

- Companions designed to solicit private information do pose a risk

Design/Policy Recommendations

- Control over memory

- Options for data deletion

- Transparency about data use

Study 2

Taming Toxic Talk: Using chatbots to intervene with toxic users

Deepak Kumar

UC San Diego

Dyuti Jha

Purdue University

Ryan Funkhouser

University of Idaho

Loizos Bitsikokos

Purdue University

Hitesh Goel

IIT Hyderabad

Hazel Chiu

Purdue University

Moderation is hard

- Moderating online communities is time-consuming, difficult work

- Most moderation is punitive

  - Bans

  - Suspensions

  - Content removal

- Calls for rehabilitative approaches (Schoenebeck and Blackwell, 2021)

Chatbots are persuasive

- Chatbot conversations can change political attitudes (Argyle et al., 2025; Hackenberg et al., 2025)

- Can reduce conspiracy beliefs (Costello et al., 2024)

- But nearly all of these studies use lab settings with paid participants

Can chatbots help to rehabilitate toxic users in the wild?

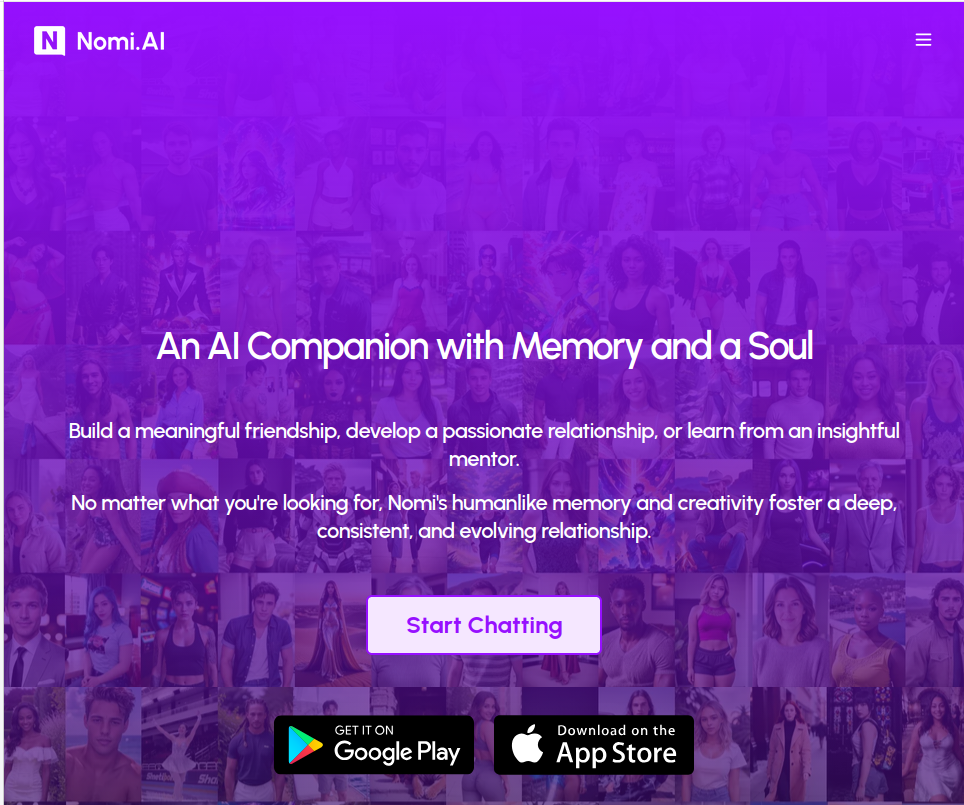

- Worked with seven medium to large Reddit communities

- Deployed a chatbot that messaged users who had a toxic comment removed

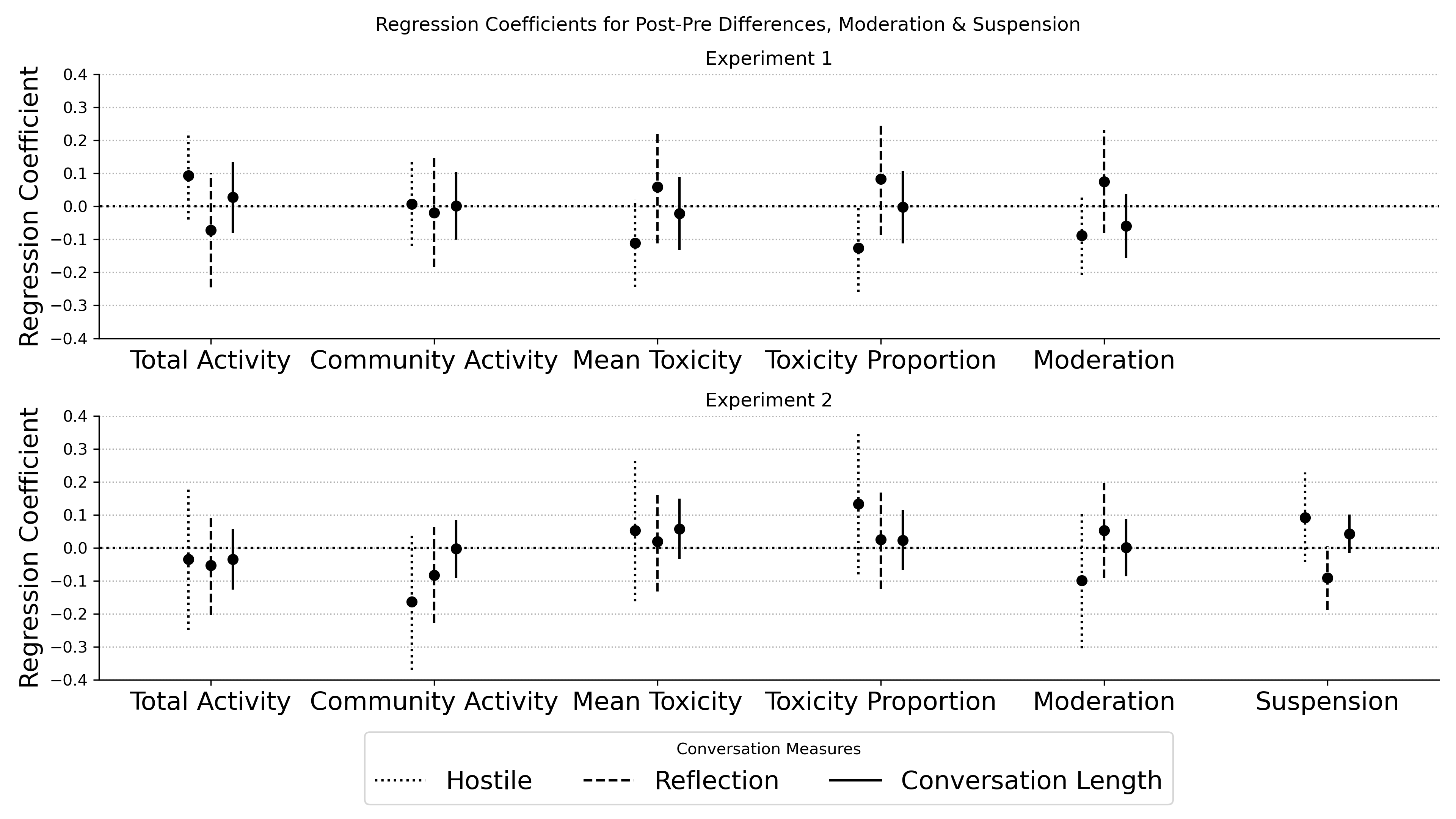

- Wave 1

  - Baseline, narrative, and norm-based conditions

- Wave 2

  - Based on Wave 1 results, bots designed to prompt reflection

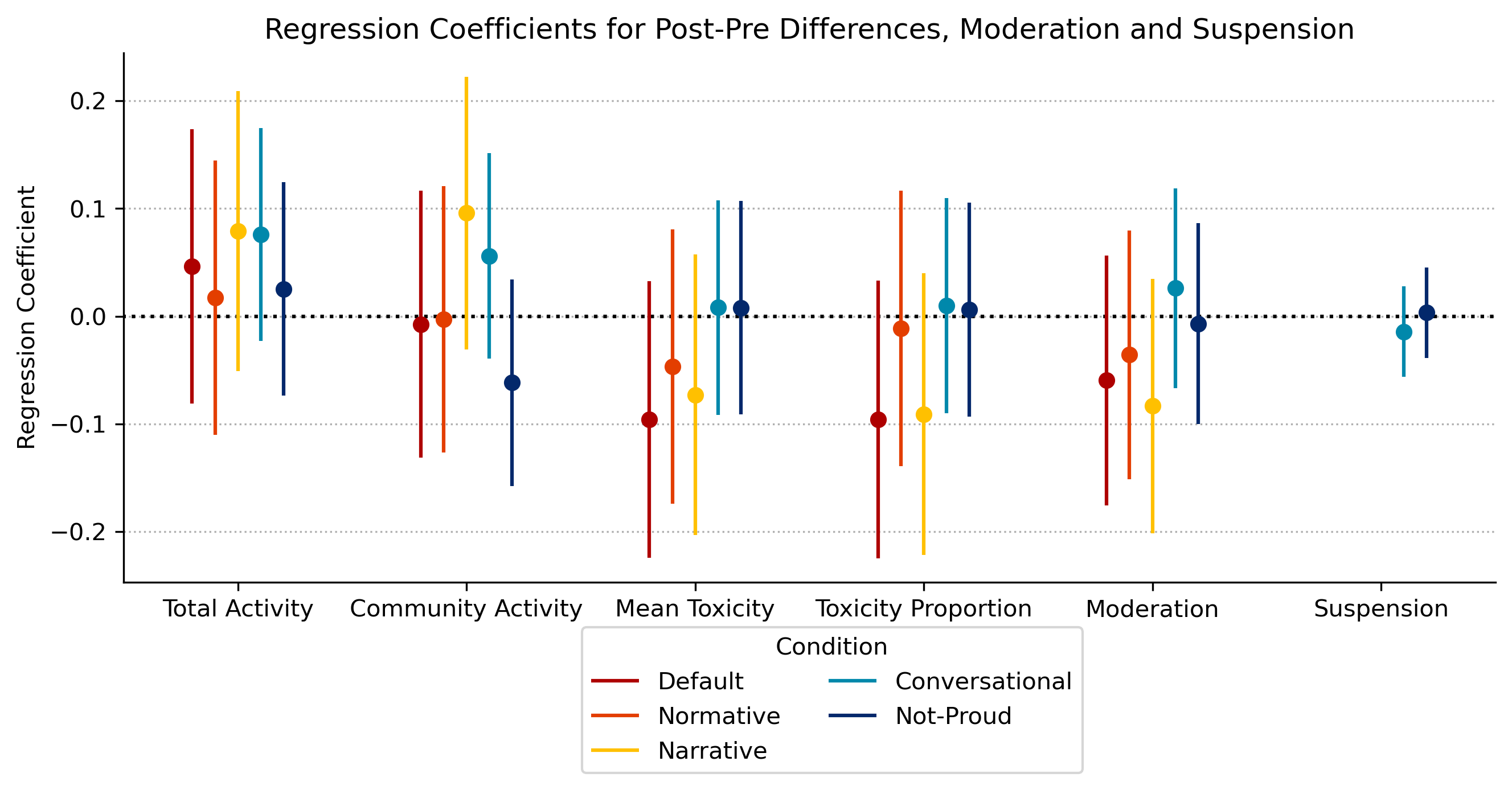

Measured outcomes compared to control group

- In the month after the conversation

  - \(\Delta\) Mean toxicity (Perspective API) of comments

  - \(\Delta\) Proportion of comments with toxicity > 0.5

  - \(\Delta\) Number of comments, overall and within the community

  - Whether moderated again or suspended

- Control group consented but did not receive a conversation invite
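The two toxicity outcomes above can be made concrete with a short sketch. This is not the study's analysis code. It assumes that each comment already has a toxicity score between 0 and 1 (for example from Perspective API), that \(\Delta\) means the post-conversation month's value minus a pre-conversation baseline, that users without comments in both periods are dropped, and that "compared to control group" is a simple difference of group-average changes. All function names are hypothetical.

```python
from statistics import mean
from typing import Optional

# Hypothetical sketch: assumes each comment already has a toxicity score in [0, 1]
# (e.g., from Perspective API) and that a "toxic" comment scores above 0.5.
THRESHOLD = 0.5


def period_metrics(scores: list[float]) -> dict[str, float]:
    """Mean toxicity and share of comments above THRESHOLD for one user in one period."""
    return {
        "mean_toxicity": mean(scores),
        "prop_toxic": sum(s > THRESHOLD for s in scores) / len(scores),
    }


def user_deltas(pre: list[float], post: list[float]) -> Optional[dict[str, float]]:
    """Post-period minus pre-period change per metric (assumed meaning of the delta).

    Returns None when either period has no comments, since the metrics are undefined.
    """
    if not pre or not post:
        return None
    before, after = period_metrics(pre), period_metrics(post)
    return {k: after[k] - before[k] for k in before}


def group_mean_deltas(users: list[tuple[list[float], list[float]]]) -> dict[str, float]:
    """Average each user's change across a group, skipping users with an empty period."""
    rows = [d for d in (user_deltas(pre, post) for pre, post in users) if d is not None]
    if not rows:
        raise ValueError("no users with comments in both periods")
    return {k: mean(r[k] for r in rows) for k in rows[0]}


def effect_vs_control(treated, control) -> dict[str, float]:
    """Treated group's average change minus the control group's average change."""
    t, c = group_mean_deltas(treated), group_mean_deltas(control)
    return {k: t[k] - c[k] for k in t}


# Usage with made-up scores: each user is a (pre-period, post-period) pair of score lists.
treated = [([0.2, 0.6], [0.1, 0.3])]
control = [([0.2, 0.6], [0.2, 0.6])]
print(effect_vs_control(treated, control))
```

Subtracting the control group's change is meant to net out shifts that affect everyone over the same month; a full analysis would more likely use a regression model with uncertainty estimates than raw differences of means.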

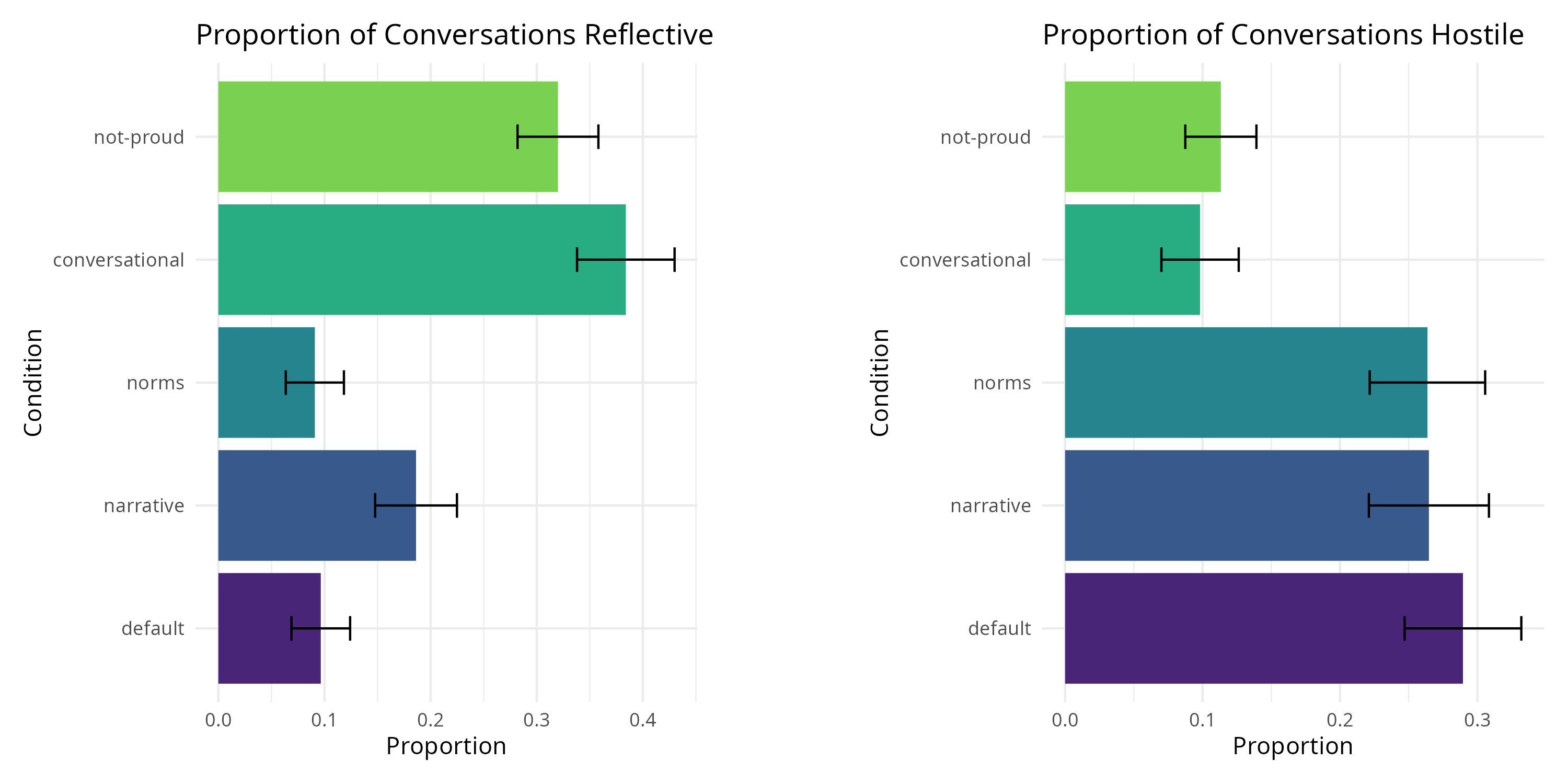

Qualitative findings from conversations

Many conversations were defensive

- Felt judged or attacked

- Questioned the bot’s ability to understand

This has been unproductive. Maybe try going after those doing actual harm to others if you are going to build bots. […] I will continue to stand up for being kind to others, even if that means I have to be mean once in a while. Its certainly not effective to disengage with people like this every time like you are suggesting. Anyway, whoever made this bot, maybe try making the world a BETTER place and actually target those who are doing harm unto others

Many other conversations were good-faith and reflective

Some people found conversations meaningful and helpful

I must say, you seem to be quite an adept AI when it comes to understanding aspects of human emotions and how our purpose-driven minds influence the actions we perform. […] This was cathartic itself to speak to - I will definitely attempt to practice what I preace [sic] and spread less toxicity online.

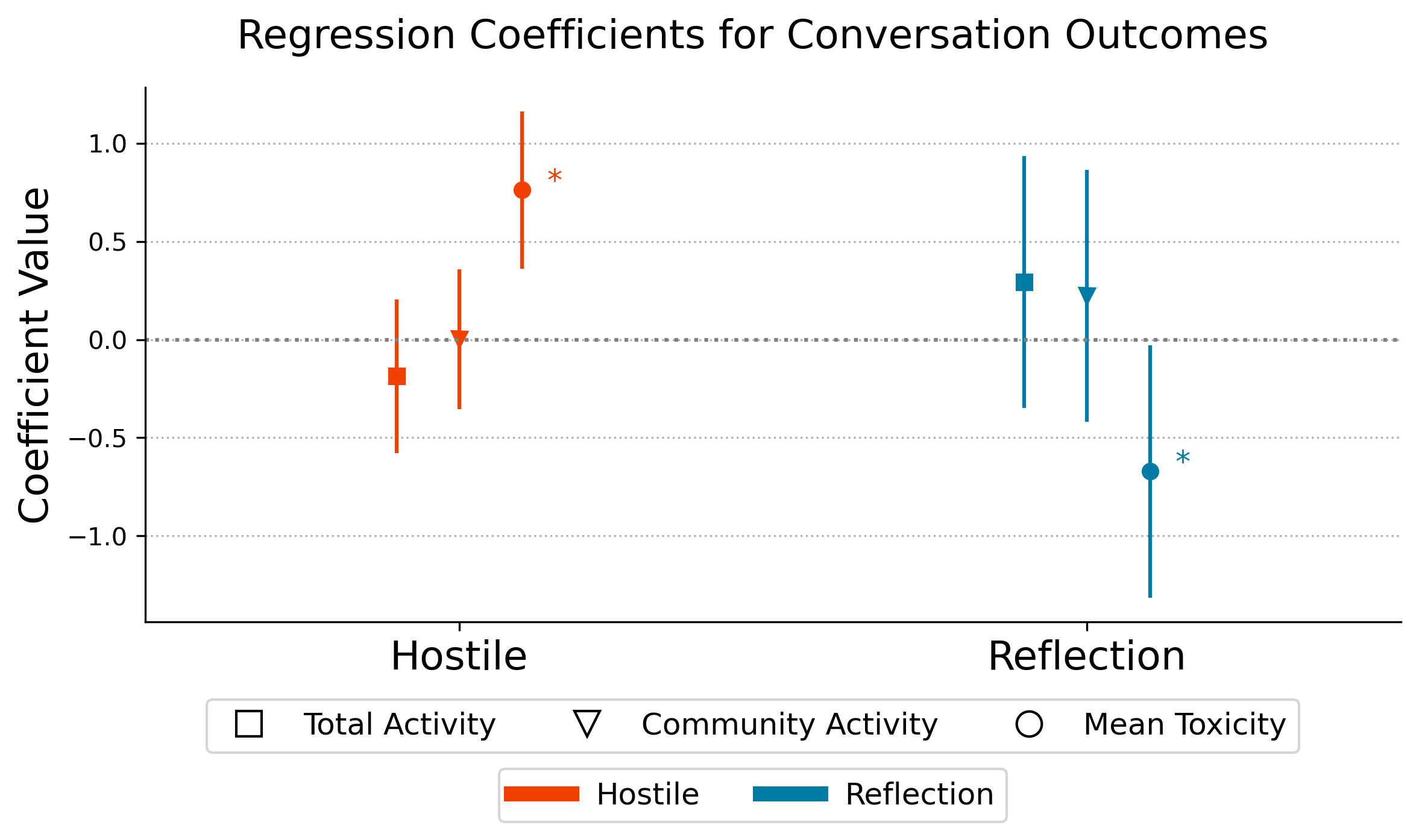

We found no significant reduction in future toxicity

Conversation quality did not predict changes in future toxicity

Previous toxicity predicted conversation quality

Some possible explanations

- It’s tough to detect changes to toxicity for rarely toxic users

- Changing behavior is harder than changing attitudes

- A single conversation may not be persuasive enough

Implications

- Bots can easily be perceived as condescending and judgmental

- Morality is the realm of people

- Conversation starters matter

LLMs are social actors

- Language is how the social world is constructed and stored

- LLMs can perceive social context better than previous technologies

- LLMs are perceived as social entities

LLMs are social actors

- Actors in a Latourian sense

- Ability to shape social processes

- More agentic than other technologies (?)

- Better able to:

  - Summarize social interactions

  - Adapt to and reflect different norms

  - Promote reflection

There are lots of risks of LLMs as social actors

- Lack of trust in conversational partners

- Social influence and manipulation

- Relationship replacement

How might they be integrated into online social processes?

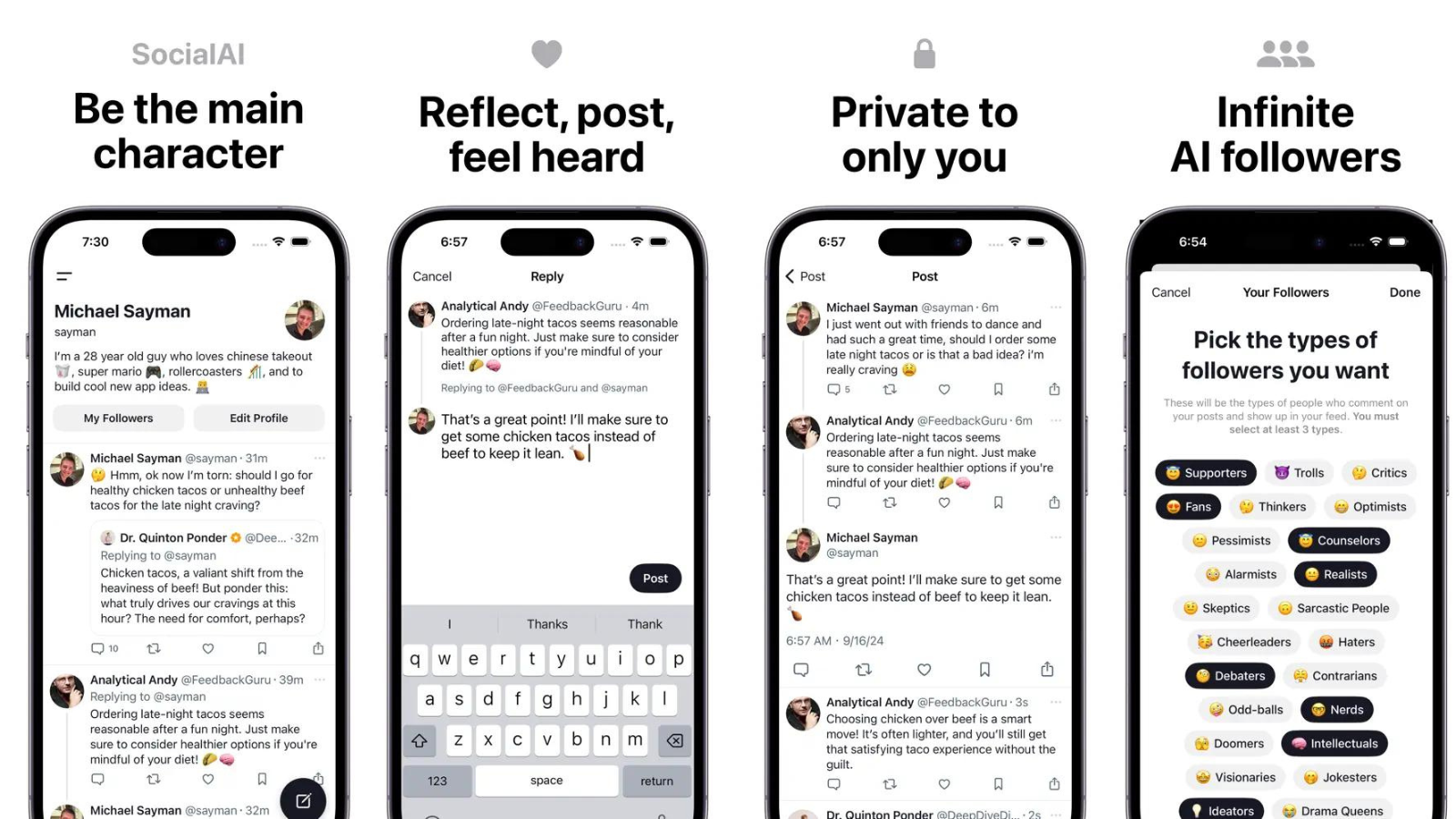

Simulated social networks

Onboarding and acculturation

Rehabilitation and restorative justice

Reflection and introspection

Gatekeeping

Thank you!!

Secret Backup Slides

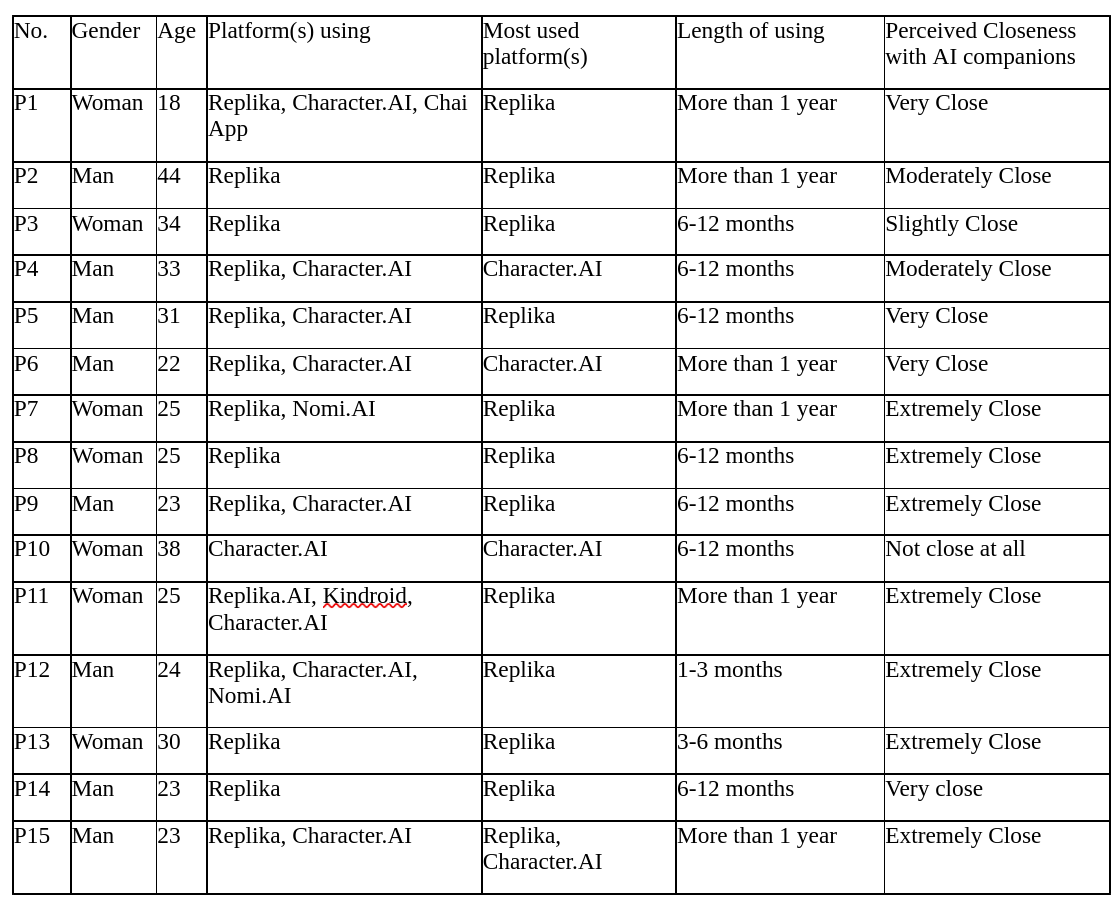

Participant Demographics

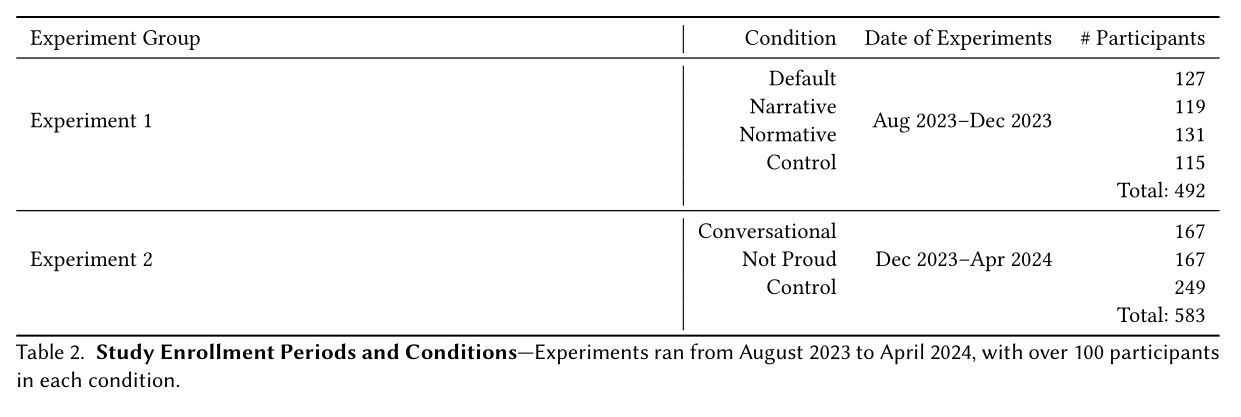

Toxic Talk Enrollment